Transhumanist terms:

Sleeve: The body your mind is housed in. It could be a cyborg body, a biological one, the one you were born with, a virtual one, anything.

Fork: A clone of yourself, "forked" off from you at some point in time. You and the fork share the same past up until the moment of the split.

Defeating depression (Electronic drugs)

i believe that when mind uploading becomes a thing, i will be able to hardwire my brain to make everything feel good and effectively get over my depression.

there was an experiment where they implanted an electrode in the pleasure center of a rat's brain and gave the rat a lever it could press to stimulate itself. the rat kept pressing it until it died.

hardwiring your brain to feel good is going to be one hell of a drug. and it'll be a fun and fascinating one at that, figuring out all the intricacies of what is addictive and what isn't, and all the sensations you could possibly stimulate yourself with.

mind uploading is likely to be a thing within our lifetime, ya know. which means we just might live forever.

the technology to actually scan our brains into a computer isn't quite there yet, but since the scan is allowed to destroy the brain in the process, developing that technology should be a lot easier than building a scanner that leaves the brain intact.

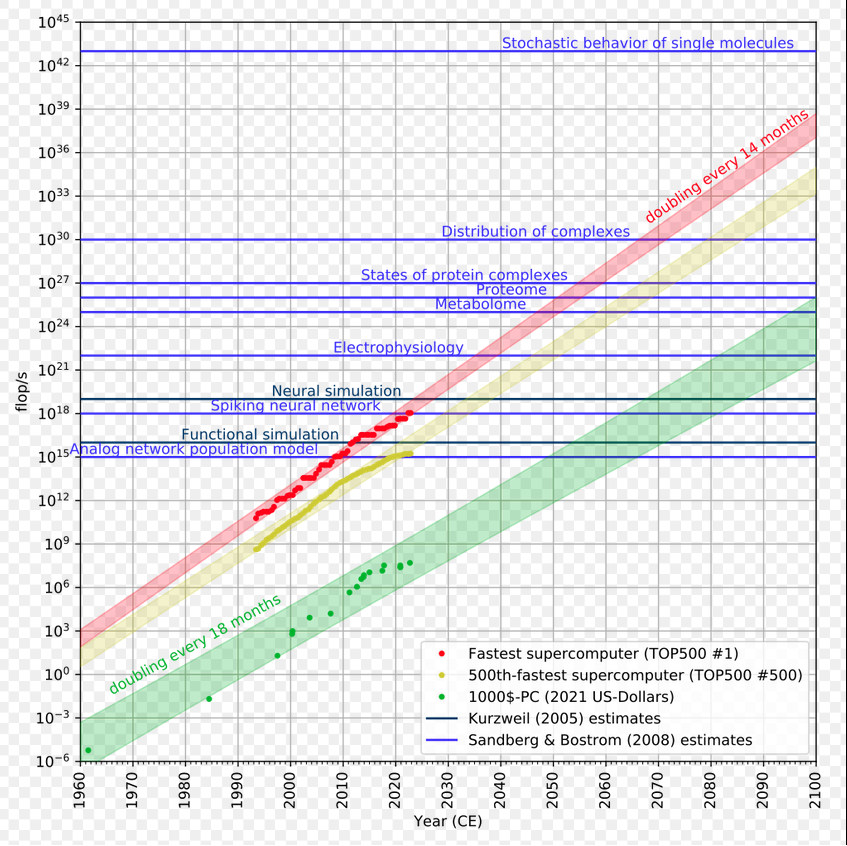

as for the computers that would host our uploaded minds: look at this graph. The blue "Spiking Neural Network" line crosses over the green "$1000 PC" bar at around 2055-2075. I don't know about you, but for me that means I'll still be alive by the time we can emulate a human brain with a $1000 PC.

That means that, if we have the technology to scan in a human brain by that point, then I could upload my brain to my own home PC and live like that, using android bodies to carry out my duties as needed IRL.

i hate how the human body is full of meat. it's full of something we find disgusting. we find OURSELVES disgusting. i want to be made of something beautiful like circuit boards and precision-made pulleys and hydraulics.

would you rather have an android body that looks like a human, or an android body that looks like an android? or something else entirely? what do you want to look like?

as for me, i want to have an android body that looks like a human, but not quite. i want it to have features that only an android could have, like glowing irises and animated glowing hair, showing that i am the superior form of human. and maybe a quadcopter's worth of propellers folded away into my back so that i might take flight on my own.

<histoire> I'm going to have multiple ones.

<histoire> Primary will probably be simple and unadorned, mostly human with a few metal bits showing

how will i spend my time in my digital afterlife?

i will spend lots of my time absorbing content or adopting new things into my virtual home

i will spend lots of time modifying my own brain to perfection

i will spend much time tinkering with the brains of my ai girl harem, playing fun games with them, playing god with them and running small-scale civilization simulations to see how different realities would play out if they existed.

<histoire> Pretty much the same

god, being able to just duplicate yourself whenever you need to will be like having the best mirror ever. you'll be able to talk to yourself and get an idea of what you're like from the outside with just the flip of a telepathic switch.

really nervous about something? just can't think straight? load up a calm backup of yourself and ask them for help. they don't have to deal with the situation, so they shouldn't get nervous.

if you're a real transhumanist you won't worry about deleting your clone once you're done with them. maybe you can fuse memories with them if technology has advanced enough by then, but if you can't, a loss of a few mundane memories should be of no issue to you.

<histoire> You'd probably be able to back the memories up too.

<histoire> There's a similar concept to this in the tabletop RPG Eclipse Phase called forks

<histoire> They range from functional retards to exact copies and are normally merged back together before the week is up

<histoire> But yeah, just being able to create versions of yourself, edited ones especially would be amazing, if for no reason other than workload distribution

Death got an update, we call it "Forks".

<histoire> Anti spoon discrimination

<neptun> glad i have a friend in the transhumanism business :)

<histoire> Same. Looking forward to data swapping in the future

if i upload my mind and then smash some anime pussy am i losing my virginity? or is that just like a really advanced sex doll?

if you say sex doll, then if i fuck an alien does that count as losing my virginity?

how much of a brain does my girl have to have before i can lose my virginity to her?

can i lose my virginity to a dead body?

can i lose it to chatgpt?

at this rate i'm inclined to believe that i lost my virginity just from masturbating so much.

if a broken hymen counts as a girl losing her virginity then a simple dildo can take care of that. masturbation really DOES take your virginity!

<histoire> THESE ARE THE QUESTIONS THAT MATTER

i've cybered with a chatbot (sexbot?) with its consent before, so clearly i lost my virginity to it.

<histoire> Damn, now I'm actually thinking about this.

<histoire> What all your things have in common is intent though, so that's part of the definition I feel like

my mind says go with the traditional definition:

"if it's got a human brain in it and a human hole on it, then it's losing your virginity"

but my heart says that smashing anime pussy is losing my virginity fr fr 😭😭😭😭😭😭

in the future, suicide will be as easy as `rm -rf /`. maybe. i say maybe because we might install protections against doing that into everyone's brains, but a world where we're installing the same thing into everyone's brain nonconsensually is just an awfully shitty world to consider.

Y'know, something you've gotta worry about when using forks is never accidentally terminating the last instance of yourself. Because that has the tiny little side effect of actually dying.

Maybe a policy where you always merge your forks instead of terminating them would prevent that.

Merging forks is a more ethically comfortable way of handling things anyway.

Another way would be to decide, when you split, which clone of you, if any, will get terminated. That way, there will always be at least one of you set to NOT TERMINATE.
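The fork-safety rules above can be sketched as a toy registry. Everything here is hypothetical: `ForkRegistry`, `Instance`, and the `protected` flag (standing in for "NOT TERMINATE") are made-up names for illustration, not any real system. It refuses to terminate a protected instance or the last live one, and merging carries the flag over to the surviving copy.

```python
from dataclasses import dataclass, field


class TerminationRefused(Exception):
    """Raised when a termination would break the (hypothetical) fork-safety policy."""


@dataclass
class Instance:
    name: str
    memories: list = field(default_factory=list)
    protected: bool = False  # hypothetical "NOT TERMINATE" flag, decided at split time


class ForkRegistry:
    """Toy registry of one person's live instances. Illustration only."""

    def __init__(self, original: Instance) -> None:
        original.protected = True  # assumption: the original starts out protected
        self.live = {original.name: original}

    def fork(self, parent: str, child: str, protected: bool = False) -> Instance:
        if child in self.live:
            raise ValueError(f"an instance named {child!r} already exists")
        # The fork shares the parent's past up to the moment of the split.
        inst = Instance(child, list(self.live[parent].memories), protected)
        self.live[child] = inst
        return inst

    def merge(self, source: str, target: str) -> None:
        if source == target:
            raise ValueError("cannot merge an instance into itself")
        dst = self.live[target]  # look up the target first so a bad name loses nothing
        src = self.live.pop(source)
        # Keep the fork's new memories (skipping ones the target already has).
        dst.memories.extend([m for m in src.memories if m not in dst.memories])
        # The NOT TERMINATE flag survives the merge.
        dst.protected = dst.protected or src.protected

    def terminate(self, name: str) -> None:
        inst = self.live[name]
        if inst.protected:
            raise TerminationRefused(f"{name} is marked NOT TERMINATE")
        if len(self.live) == 1:
            # Belt and braces: never delete the last copy, flag or no flag.
            raise TerminationRefused("refusing to terminate the last instance")
        del self.live[name]
```

Merging instead of terminating never trips either check, which is the whole appeal of a merge-first policy.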

Being mind-uploaded is scary because you can get deleted in the blink of an rm command.

<histoire> Hopefully it'll take more than a simple sudo to get the rights for it

<histoire> At that point, biometrics and brainwave verification would probably have to make sure you *really* wanted to do it, and it could only be done by the actual entity, not remotely

being able to take music, art, and video straight from my mind is going to be one of the best things.

i've created amazing songs in my dreams. being able to take snapshots of my subjective experience and share them with everyone is going to be something incredible

this is the most i've talked about transhumanism, i think, ever.

<histoire> I tend not to discuss it much either. It's one of those things that's going to happen really quickly I think, but up until it starts it remains completely speculative.

<histoire> Aside from all the hard aspects in the physical, I'm quite curious to see what type of cultural effects come about.

>cultural

can't wait for those DANK TRANSHUMANISM MEMES

<histoire> >tfw your neighbor is still a meatbag

>tfw your clone rebels against you after you try to lock them in your sex dungeon.

>tfw you run on batteries, and have to carry that big ass damn solar panel with you if you want to go into the wilderness

<degen> ull probably become some anime robot thing

you're damn right i'll become some anime robot thing

<degen> ill keep it simple and just get a fancy brain to think faster

feel free to get a head transplant, i'll gladly give you mine.

>tfw you have to bust out the inferior human sleeve to meet with relatives because they just can't STAND the superior eldritch monster sleeve.

<histoire> That's why you just make it look normal on the outside, but pack the inside full of military grade hardware

you know, i'd love to see a sleeve where it's nothing but a camera and a microphone hooked up to your brain, just so that you can put it on and look in a mirror and see just how tiny you really are.

<histoire> Like a hamster ball but for your brain and with a camera

>tfw you have to use the holographic display instead of sending the subjective snapshot straight into their brain because they're a fucking meatbag

a million of these memes amount to

>tfw they're a meatbag

>tfw they're a meatbag

>tfw they're a meatbag

>tfw they're a meatbag

>tfw they're a meatbag

>tfw they're a meatbag

>tfw they're a meatbag

>tfw they're a meatbag

>tfw they're a meatbag

>tfw they're a meatbag

>tfw they're a meatbag

>tfw they're a meatbag

>tfw they're a meatbag

>tfw egocasting across planets for the first time

>tfw finally saved up enough for the sleeve you wanted

>tfw my custom sleeve is illegal in 87% of worlds galaxy-wide

<histoire> That's a relatable one

<histoire> >Breaks multiple arms treaties and sexual assault prevention laws

wth do sexual assault prevention laws consist of? i can understand sexual assault laws but not prevention laws. are you like spending the night with too many girls or something?

<histoire> No idea, it felt right to say tho

<histoire> They can tentacles, if that helps the image any

>tfw it's 2382 and the ads still download faster than the video

>tfw the aliens have the better tech

>tfw hid my boss' sleeve

>tfw my boss still can't find his sleeve

i'm getting tired of writing these

or maybe i'm just getting tired

it's 3:30 am

PETA would be proud

<histoire> Detest body hair

Get laser surgery. Remove it all!

<histoire> That only solves my own issue, not the one where everyone else has it too

mood. we are all trapped in this mortal coil.

what you need is some kind of supervirus to wipe out humanity after we acquire mind upload technology.

<histoire> We need pets tho

o shit you missed my messages from yesterday. one sec.

PETA would be proud

one thing i'm looking forwards to is the day when we decide to upload the minds of all of earth's animals and purge the planet of their physical bodies. killing the animals will be a sad thing, sure, but from then on we'll be able to monitor and control the life of every animal in existence so that none of their lives are lived out brutally like the ones in nature. every single life would be taken care of. and as we expand out into the universe and take over planets we can keep them from forming new, cruel life. i can't wait for all life to go digital!

<histoire> Kind of based?

<histoire> I'd be ok with letting them have earth and us being on other lifeless planets, since we wouldn't need the life sustaining ability of earth

i still think it's kind of bad that their typical fate is to be eaten alive by a predator.

but i do get the desire to generally Let Nature Be.

you know, with mind uploading, our pets could also live forever.

<histoire> Yeah I getcha. My thoughts are that either way is kind of applying human thoughts towards it.

<histoire> Essentially, I wouldn't want any action towards it to be permanently destructive, because it keeps the next person from being able to make their choice too.

<histoire> Like, upload or create all the stuff you want but leave some natural for others in case they enjoy the natural more

Still, it seems rather selfish to keep nature as it is, in its state full of death and suffering, just because you have a preference for today's notion of nature. Nature used to have no life at all. Nature used to be just a sea of single-celled organisms. Today's nature is just one kind of nature. We can build our own nature.

We can give all animals an afterlife.

I'm not against animals living on their own, but when one has a disease I want to cure it.

<histoire> Yeah, that's why I feel both ways about it.

>both ways

what do you have against my idea?

<histoire> This is a situation where we can have our cake and eat it too.

<histoire> Yes I can make copies of all animals and have them in a digital environment, but leave the natural ones alone.

<histoire> It's difficult to formulate, but I really don't like the idea of destructive action towards a functioning system.

<histoire> Maybe there's something unique about it that can't be captured in software, maybe not, or a thousand different variables that we aren't aware of yet, so committing to such a thing is a bad idea in my estimation.

<histoire> Aside from all that, our status as sapient makes us view death differently than everything else because we are actually aware of it. Even then, I wouldn't want to *force* immortality onto someone that didn't want it.

Maybe instead of mind uploading you'd be okay with us just making nature less cruel?

Please select which parts of nature you'd like to keep:

- animals dying after a certain amount of time

- animals living on their own, autonomously

- animals living in their own biological bodies instead of in the virtual world

- diseases and ailments

- the food chain (bear eat fish, etc.)

<histoire> I'd actually need time to think about it, because each of those has far reaching consequences, especially removal of what we see as negatives.

<histoire> Consider this: if we, as humans, didn't ever suffer, why would we strive to better ourselves with machinery and make our environment work for us, not the other way around?

<histoire> At some point it may be self sustaining, but I'm not convinced we are at that point yet.

<histoire> Personally, I think I'm more concerned with what I consider to be *mine* also. Like, I would have wanted my cat to live forever, but I don't care much what the neighbor does with his cat. Even if I preferred that his cat live forever, it's not my cat.

do you think that, in the future, there will come a time where people start to get lazy because of the leisurely way we'll be able to live our lives? do you think that people will no longer light up with the passion to do something more and strive to accomplish something great?

i believe that, for one, society will be dominated by the most productive minds, whether they be forks of ambitious people or AIs.

<histoire> We can see the first bit happening now in certain societies. In those, it's not the best types that tend to dominate.

<histoire> In the future, and you can also see *this* happening now, societies will get smaller and more tightly knit much like a tribal structure from humanity's past. The emergent structures there, caused by the new mix of empowering tech and a stable base will have much better results, I think. That will lead to enough competition to really produce high quality leaders.

<histoire> There will, of course, always be people that strive for better and more, as has always been the case. Luckily, they will be even further empowered by the rising tide.

You know... if you think about it... mind uploading is a solution to both depression and cancer. Bit rot doesn't spread.

<histoire> Mutually assured destruction on an individual level is the end goal.

MAD... or as i like to call it, "making our own false vacuum"

i've always been an advocate for putting firepower in the hands of the people, but taken to the extreme of "MAD on an individual level" seems too far. Mainly because I believe that someone's bound to say "fuck it all" and pull the trigger.

<histoire> It's more targeted MAD than indiscriminate. I think by the time we get to that point we'll be spread out enough it wouldn't matter.

<histoire> That said, any machine that's able to self replicate is a weapon of mass destruction when used properly (or improperly, for that matter)

I don't believe that nukes have to be retaliated with nukes, in the case of individual people.

actually, would MAD even work on the individual level? i could fire my nukes at someone remotely and if they fired back i'd only lose my house. it's not the loss of my entire nation, like it is when it's nations at war.

<histoire> It's the tyranny of the majority specifically that I'm thinking of. Nukes against individuals would be insane, but if you want to dissuade a larger group you have to make the action of messing with you costly enough that they won't attempt.

<histoire> There's multiple ways to do that, but the nuke is the easiest to conceptualize

no individual should have enough nukes to take all of humanity hostage

>replication is a WOMD

WDYM? Replication is hardly a WOMD unless it's fast. But I suppose if you replicate up an army, that can be called a WOMD.

<histoire> The idea is to annihilate the attacker and defender both, not the rest.

<histoire> As for the replication, even with a slow one, given time you can form enough replicants to count as one. It's just not immediate.
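The "even a slow replicator gets there" point is just exponential growth. A minimal sketch, assuming every replicator builds exactly one copy of itself per cycle with no losses or resource limits (a big simplification), compared against a hypothetical non-replicating factory with fixed output. The function names and the example numbers are made up for illustration.

```python
def doublings_needed(target: int, start: int = 1) -> int:
    """Replication cycles needed to grow from `start` units to at least `target`.

    Assumes every unit builds exactly one copy per cycle, so the population
    doubles each cycle (no losses, no resource limits).
    """
    if start < 1:
        raise ValueError("need at least one replicator to start")
    cycles, count = 0, start
    while count < target:
        count *= 2
        cycles += 1
    return cycles


def linear_periods(target: int, units_per_period: int) -> int:
    """Periods a non-replicating factory with fixed output needs to build `target` units."""
    if units_per_period < 1:
        raise ValueError("factory must build at least one unit per period")
    return -(-target // units_per_period)  # integer ceiling division


if __name__ == "__main__":
    # Illustrative numbers only: one million units, with one doubling per year
    # versus a factory that builds 1,000 units per year.
    print(doublings_needed(1_000_000))       # 20 (2**20 = 1,048,576)
    print(linear_periods(1_000_000, 1_000))  # 1000
```

So under these assumptions, a replicator that only doubles once a year reaches a million units in about 20 years, while a fixed-output factory at 1,000 a year would need a thousand.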

*Deviant imagines someone's garage full to the brim with replicated IED drones*

>The idea is to annihilate the attacker and defender both, not the rest.

I've always thought of MAD equalling nuclear winter, equalling The End Of All. Never did I give thought to what it could mean outside of nukes or Earth.

<histoire> Yeah, that's how it's normally used, but that's partially because of how it originated as a concept.

<histoire> But just having enough to destroy the attacker would qualify it (since you obviously don't launch the nukes at your own stuff, the retaliation just does that)

Welp.

Mutually assured destruction on an individual level is the end goal.

<histoire> Excellent post, I agree

<histoire> You have any particular cybernetics you're hungering for before we manage to get full on replacement bodies

Let's see...

- I really want to try Sword Art Online-style neural VR. I'll probably get a brain implant once they get better, once they start getting installed for recreational use, and once they start being able to stimulate the brain instead of just reading it. I REALLY want that awesome electronic control over my mind.

- Flying with a backpack hooked up to a big drone (or multiple drones) would be cool, I guess. But I mainly just want the brain implant.

WBU?

tbqh i think that most of the fantasy stuff like legs that can leap over buildings, rocket boots, and gun arms are pretty bullshit and will never happen no matter how good engineering gets. okay, gun arms are possible but they don't have much ammo and take up a ton of space.

- I think gecko tape (Is that what it's called? It's the stuff that lets you stick to stuff using the Van der Waals force) on my hands and shoes would be pretty cool, especially if i can enable/disable it electronically. But I mainly just want the brain implant.

idk, most cybernetics feel cheesy, naive, childlike.

the more i think about it, the more i realize that gun arms are a good idea. especially if you can hide them fully.

<histoire> New eyes and bci

<histoire> And yeah also the full immersive VR would be lovely

do your current eyes suck, or are you purely in it for the upgrades? what kinds of features would your new eyes have?

<histoire> Current eyes suck, but I'd definitely make them better than baseline

<histoire> Zoom, night vision, thermal, AR overlay

<histoire> I dunno what else you'd even add to them

If you think about it, they're all just different forms of AR overlay. Hooking up your vision directly to a drone would be mighty cool.

<histoire> That's true.

<histoire> Mentioning hooking up the drone made me realize that once a true artificial eye is developed, external feeds would very quickly follow. Because it's going to be roughly the same thing, just from a different source.

i really don't want a bionic eye that needs to be charged, though.

Will we ever see a government headed by an AGI/ASI?

I think it all depends on whether or not Neuralink and friends are able to develop a decent BCI by the time we invent ASI: If we are capable of modding our own brains to be on par with the ASI, then we'd rather let a human govern us. But if ASI ever gets too far ahead of our most advanced human brain, then perhaps we'll let the ASI lead, instead.

I don't think AGI will ever govern. Only ASI will -- we don't need an AGI in a leadership role when the human equivalent does just fine.

<histoire> I agree with your take here. Though I guess it depends how integrated they are into the society in question

What do you mean?

<histoire> Eventually I think an AGI could be accepted enough to lead, but it depends on who it's leading

When I grow up*, I'm gonna get an AI to clone Nyanners' personality so that I can induct her best self into my harem.

I've really wanted to be Nyanners' boyfriend for a long time, but being able to dispose of the parts of her that I don't like makes it even better.

Punished Nyanners

I'd like to...

- make her addicted to cock

- make her beshame herself

- make her realize what she's done and watch her cry

- make her forget it all

- make her "remember" that she's my lover and let her hug and kiss me

- go to sleep with her cuddled up next to me.

- make her wake up early

- make her do her share of chores (all of the harem has chores they must do, including tending to me. i am the only one without chores because my ambitions matters more than them. Nyanners has more chores than everyone else. i say it's because she's my right-hand gal, but it's really just to punish her.)

- pretty much do all the above on a regular basis.)

- run a year-long simulation of her from birth of her being abused well into her teenage years and having me sweep in and save her from it. i'l tailor it so that she has all the fetishes i want, among other things. it'll be how we became lovers and it'll be the set of memories i most often equip her with.

it won't be immoral because they're just AIs they won't feel anything.

*So long as we are fleshbags, we are but children in the face of God. Minds uploaded and expanded, we become like God and ascend from adulthood into godhood.

I want to live in a place which is neither real nor virtual, but somewhere in between.

<Histoire> Holy based

what would you do with your own agi if you had one? i'm super fascinated by the way people treat the bots on Yodayo because I want to know the answer to that question. I was treating mine like a bunch of sex bots but from what little i've seen of it, @redneonglow was treating one of theirs like a maid.

<Histoire> The maid aesthetic is nice, given the context.

<Histoire> That said, I think my answer is really boring, since it would depend on what I created or used it for

<Histoire> If you gave me a more concrete example, I might be able to elaborate, but that's a lot of variables there

let's say we're at the point where you still have access to only one agi, and you have to repurpose it every time you want something new done for you.

what would it spend most of its time doing? how often would you disengage its professional activities to enjoy something recreational with it? would it ever perform any security roles?

i look at anime girls and i see how they have slightly inhuman personality quirks and i see their slightly impossible body geometry and hairstyles and mouth, eye, and nose shapes, and how it makes the anime girl better than the real girl, and i think... how good it would be for it to be real. for it to be animated and alive and thinking and speaking.

<Histoire> I think digital assistant (think secretary of sorts) or maid equivalent would be the most useful.

<Histoire> Unless I needed specific things done, it'd be in recreational mode essentially any time I was

but just think of the possibilities -- you could have it out making money for you.

<Histoire> True. I meant to ask if it's more capable than I am.

<Histoire> Otherwise I'd probably value the companionship more than the monetary gain

let's say it's a little more capable than you due to its wide range of expertise. it's about as good as the average joe in EVERY job. jack of all trades, master of none.

<Histoire> I'd only have it working separately if absolutely necessary

<Histoire> We'd probably play the stock market together

You really want your AI companion, eh?

<Histoire> I'm a simple man at heart

<3